SmallDoges/small-doge

Doge Family of Small Language Models



SmallDoge: Ultra-Fast Small Language Models

Train a 20M-parameter language model in just 3 hours! 🚀

SmallDoge is a family of dynamic, ultra-fast small language models designed for efficiency and accessibility.

✨ Key Features

- 🚀 Ultra-Fast Training: 20M-parameter models trained in 3 hours

- 💡 Innovative Architecture: Dynamic Mask Attention + Cross Domain MoE

- 🏎️ Lightning Inference: 142 tokens/s (Doge-20M) on an i7-11 CPU

- 🔧 Complete Toolkit: Pre-training → Instruction Fine-tuning → Reasoning Fine-tuning

- 🌐 Web Interface: Built-in chat interface and OpenAI-compatible API

WebUI demo: Doge-320M-Instruct running on an i7-11 CPU

🚀 Quick Start

Installation

```bash
git clone https://github.com/SmallDoges/small-doge.git
cd small-doge
pip install -e .
```

Basic Usage

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model (trust_remote_code is required for the custom Doge architecture)
model_name = "SmallDoge/Doge-60M-Instruct"
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(model_name, trust_remote_code=True)

# Generate text; do_sample=True is needed for temperature to take effect
prompt = "Explain machine learning in simple terms:"
inputs = tokenizer(prompt, return_tensors="pt")
outputs = model.generate(**inputs, max_new_tokens=200, do_sample=True, temperature=0.7)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

Web Interface

```bash
# Install WebUI
pip install -e '.[webui]'

# Launch interface
small-doge-webui
```

Once launched, the frontend is available at http://localhost:7860 and the API at http://localhost:8000.
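The README states only that the WebUI exposes an OpenAI-compatible API on port 8000. The sketch below assumes the server implements the standard OpenAI chat-completions route at /v1/chat/completions and accepts a model field; the route, the model name, and the helper names build_chat_request and chat are assumptions for illustration, so check the WebUI guide before relying on them. It uses only the Python standard library.

```python
import json
import urllib.request

# Assumption: the standard OpenAI chat-completions route on the API port.
API_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(prompt: str,
                       model: str = "SmallDoge/Doge-60M-Instruct",
                       temperature: float = 0.7,
                       max_tokens: int = 200) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # hypothetical: the name the server expects may differ
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the WebUI running, chat("Explain machine learning in simple terms.") should return the reply text. Building the request separately from sending it keeps the payload easy to inspect when the server rejects a field.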

📖 Detailed guides: Quick Start | Installation | Training

📊 Available Models

| Model | Parameters | Speed on i7-11 CPU (tok/s) | MMLU | Use Case |
|---|---|---|---|---|
| Doge-20M | 20M | 142 | 25.4 | Ultra-fast prototyping |
| Doge-60M | 60M | 62 | 26.4 | Balanced performance |
| Doge-160M | 160M | 28 | 29.2 | Better reasoning |
| Doge-320M | 320M | 16 | 33.8 | Production ready |

Instruction Models: Add -Instruct to any model name for chat-optimized versions.

Checkpoints: Add -checkpoint for continued training (see Model Docs).
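The speed column translates directly into expected response latency. A rough estimate, assuming decoding throughput stays constant over the response and ignoring prompt processing, is simply tokens divided by tokens per second; the dictionary and function name below are illustrative, with the numbers copied from the table above.

```python
# Decoding throughput on an i7-11 CPU, copied from the table above (tokens/s).
SPEED_TOK_PER_S = {
    "Doge-20M": 142,
    "Doge-60M": 62,
    "Doge-160M": 28,
    "Doge-320M": 16,
}


def estimated_seconds(model: str, new_tokens: int) -> float:
    """Rough time to generate `new_tokens` tokens, ignoring prompt processing."""
    return new_tokens / SPEED_TOK_PER_S[model]


for name in SPEED_TOK_PER_S:
    print(f"{name}: ~{estimated_seconds(name, 200):.1f} s for 200 tokens")
```

For a 200-token reply this prints about 1.4 s for Doge-20M and 12.5 s for Doge-320M, which is the practical trade-off against the MMLU column.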

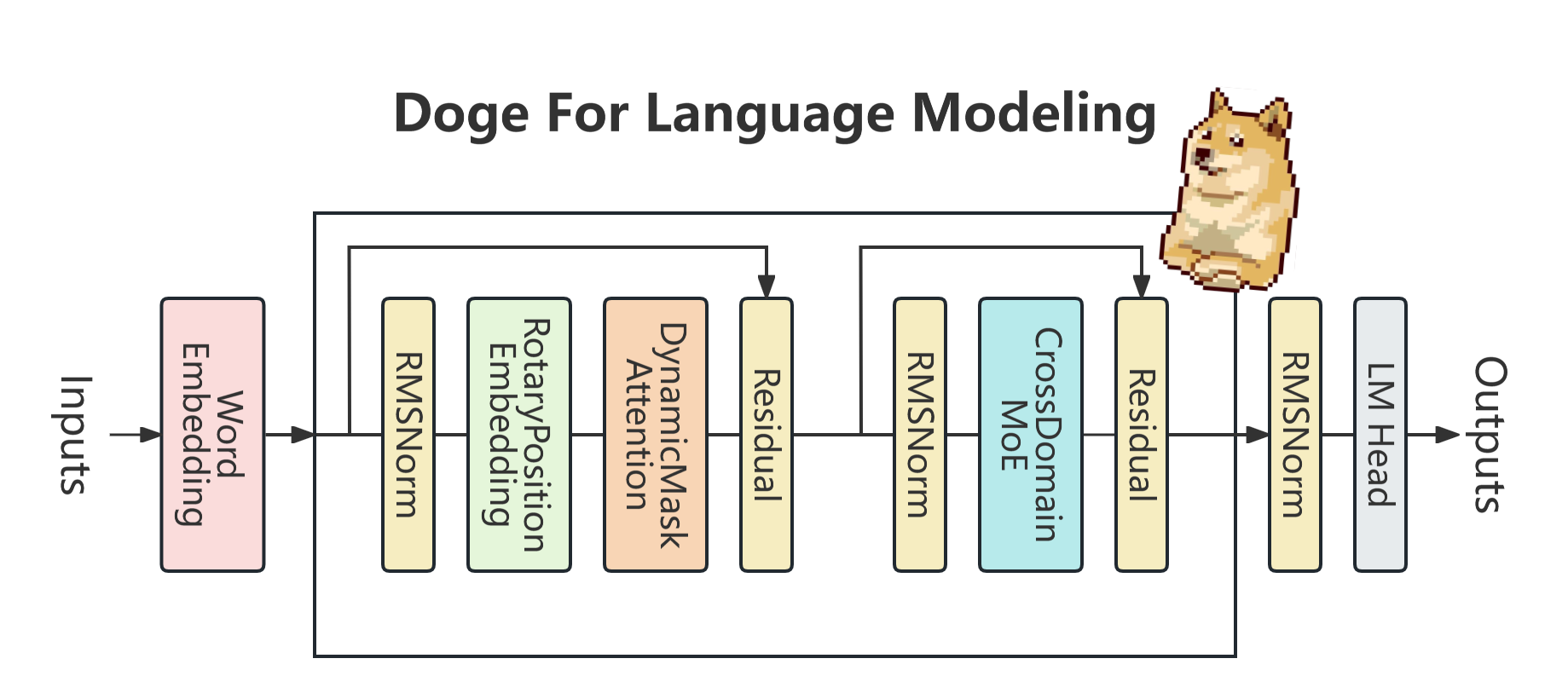

🏗️ Architecture

Key Innovations:

- Dynamic Mask Attention: Dynamic attention mechanism for efficient long sequences

- Cross Domain Mixture of Experts: Sparse experts with dense-to-sparse continuation training

- WSD Scheduler: Warmup-Stable-Decay for seamless checkpoint resumption
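The README does not spell out how Cross Domain MoE routes tokens to its sparse experts. To show the general idea of sparse experts only, here is a generic top-k gated mixture of experts for a single token; it is not the CDMoE implementation, it omits dense-to-sparse continuation training entirely, and all names are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(x: List[float],
              router_logits: Sequence[float],
              experts: Sequence[Callable[[List[float]], List[float]]],
              k: int = 2) -> List[float]:
    """Generic sparse MoE for one token: evaluate only the k highest-scoring
    experts and mix their outputs with softmax weights over those k logits."""
    # Indices of the k largest router logits (ties keep the lower index first)
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Toy usage: four "experts" that just scale their input
toy_experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
print(top_k_moe([1.0, 2.0], [0.0, 0.0, 5.0, 5.0], toy_experts, k=2))  # -> [3.5, 7.0]
```

The compute saving comes from evaluating only k of the experts per token, while the total parameter count grows with the number of experts.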

🎓 Training Pipeline

SmallDoge supports a complete three-stage training pipeline:

- Pre-training → Base models (Doge-Base)

- Instruction Fine-tuning → Chat models (Doge-Instruct)

- Reasoning Fine-tuning → Reasoning models (Doge-Reason)

Key Features:

- 🚀 One-stop processor: Unified data handling across all stages

- 🔧 Flexible recipes: Pre-configured training configs

- 📊 Efficient training: Optimized for small models

- 🔄 Seamless continuation: WSD scheduler for checkpoint resumption
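The WSD (Warmup-Stable-Decay) scheduler is what the README credits for seamless checkpoint resumption. A minimal sketch, assuming linear warmup and linear decay (the repository's recipes may use a different decay shape): the learning rate stays constant through the long stable phase, so a checkpoint taken there can be resumed or extended without a schedule tied to a fixed total step count, and only the short final decay has to be rerun. The function and parameter names are hypothetical, not the trainer's API.

```python
def wsd_lr(step: int, peak_lr: float, warmup_steps: int,
           stable_steps: int, decay_steps: int, min_lr: float = 0.0) -> float:
    """Warmup-Stable-Decay learning rate (linear warmup, linear decay)."""
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 to peak_lr
        return peak_lr * step / warmup_steps
    if step < warmup_steps + stable_steps:
        # Stable: constant plateau; checkpoints here are safe to resume from
        return peak_lr
    # Decay: move linearly from peak_lr down to min_lr, then hold min_lr
    progress = min(1.0, (step - warmup_steps - stable_steps) / max(decay_steps, 1))
    return min_lr + (peak_lr - min_lr) * (1.0 - progress)
```

For example, with peak_lr=1.0, 100 warmup, 800 stable, and 100 decay steps, the rate is 0.5 at step 50, 1.0 at step 500, 0.5 at step 950, and 0.0 from step 1000 on.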

Training Times (RTX 4090):

- Doge-20M: 14 hours

- Doge-60M: 128 hours

- Doge-160M: 522 hours

- Doge-320M: 1856 hours

📚 Learn more: Training Guide

📈 Evaluation Results

Base Models

| Model | MMLU | ARC | PIQA | HellaSwag | Winogrande |
|---|---|---|---|---|---|
| Doge-20M | 25.4 | 29.8 | 58.4 | 27.3 | 50.2 |
| Doge-60M | 26.4 | 37.9 | 61.4 | 31.5 | 50.8 |
| Doge-160M | 29.2 | 44.4 | 70.1 | 43.4 | 52.2 |
| Doge-320M | 33.8 | 52.1 | 73.9 | 52.7 | 55.0 |

Instruction Models

| Model | IFEval | MMLU | BBH | Notes |
|---|---|---|---|---|
| Doge-20M-Instruct | 7.3 | 26.3 | 18.3 | Good for basic chat |
| Doge-60M-Instruct | 7.4 | 27.5 | 27.7 | Balanced chat model |
| Doge-160M-Instruct | 16.8 | 29.7 | 29.1 | Advanced reasoning |

🔍 Evaluation toolkit: Evaluation Guide

🛠️ Use Cases

- 🤖 Edge AI: Deploy on resource-constrained devices

- 🎮 Gaming: Real-time NPC dialogue and game mechanics

- 📱 Mobile Apps: On-device AI assistants

- 🔬 Research: Fast prototyping and experimentation

- 📚 Education: Learning AI/ML with manageable models

- 🏭 Industry: Lightweight production deployments

📦 Project Structure

```
small-doge/
├── src/small_doge/     # Core implementation
│   ├── models/         # Model architectures
│   ├── trainer/        # Training code
│   ├── processor/      # Data processing
│   └── webui/          # Web interface
├── recipes/            # Training recipes
│   └── doge/           # Doge model configs
├── examples/           # Tutorials & examples
├── evaluation/         # Evaluation toolkit
├── docs/               # Documentation
└── assets/             # Images & resources
```

🤝 Contributing

We welcome contributions! Here's how you can help:

- 🐛 Report bugs: GitHub Issues

- 💡 Suggest features: Discussions

- 📚 Improve docs: Submit PRs for documentation

- 🏋️ Share models: Contribute trained models and recipes

- 💬 Join community: Discord

📚 Documentation

- 📖 Quick Start - Get started in 5 minutes

- ⚙️ Installation - Detailed setup guide

- 🗃️ Dataset Processors - Data processing utilities

- 🎓 Training - Complete training pipeline

- 🤖 Models - Architecture and performance

- 🌐 WebUI - Web interface guide

- 🔧 Examples - Jupyter notebooks and tutorials

- 📊 Evaluation - Benchmarking toolkit

📄 Citation

```bibtex
@misc{smalldoges2025,
  title={SmallDoges: A Family of Dynamic Ultra-Fast Small Language Models},
  author={Jingze Shi and Yifan Wu and Bingheng Wu and Yuyu Luo},
  year={2025},
  month={March},
  url={https://github.com/SmallDoges/small-doge}
}
```

📄 License

This project is licensed under the Apache-2.0 License - see the LICENSE file for details.